by Praveen Asthana

Data centers are the compute factories of the modern information economy (as well as of our social media addiction), and they are extremely energy intensive. To take one example, Facebook used a total of 7.17 million megawatt-hours of electricity in 2021, a 39% increase over 2019. Of that, 97% went to its data centers and the rest to its offices. At the national level, data centers in the United States are estimated to consume around 2% of the country’s electricity, while European data centers consume upwards of 3% of the continent’s electricity.
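To make the Facebook figures concrete, the short Python sketch below applies the quoted percentages. It only rearranges the numbers given above. The 2019 total it prints is implied by the 39% growth figure, not taken from a separate source, and the function names are illustrative.

```python
# Figures quoted in the text for Facebook's 2021 electricity use.
TOTAL_2021_MWH = 7.17e6   # 7.17 million MWh in 2021
DATA_CENTER_SHARE = 0.97  # share of that total used by data centers
GROWTH_SINCE_2019 = 0.39  # 39% increase over 2019


def data_center_mwh(total_mwh: float, share: float) -> float:
    """Electricity attributable to data centers."""
    return total_mwh * share


def implied_baseline(total_mwh: float, growth: float) -> float:
    """Back out the earlier year's total from a fractional increase."""
    return total_mwh / (1.0 + growth)


if __name__ == "__main__":
    dc = data_center_mwh(TOTAL_2021_MWH, DATA_CENTER_SHARE)
    base = implied_baseline(TOTAL_2021_MWH, GROWTH_SINCE_2019)
    print(f"Data centers, 2021: {dc / 1e6:.2f} million MWh")
    print(f"Implied 2019 total: {base / 1e6:.2f} million MWh")
```

With these inputs, data centers account for roughly 6.95 million MWh, and the implied 2019 total is roughly 5.16 million MWh.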

In absolute terms, this is a lot of energy, which is notable at a time of widespread concern about energy shortages and global warming. But an even bigger issue is that a large share of the energy consumed in data centers, as much as 40%, goes toward cooling the hot servers. This energy is overhead and is simply wasted.

No one likes overhead.

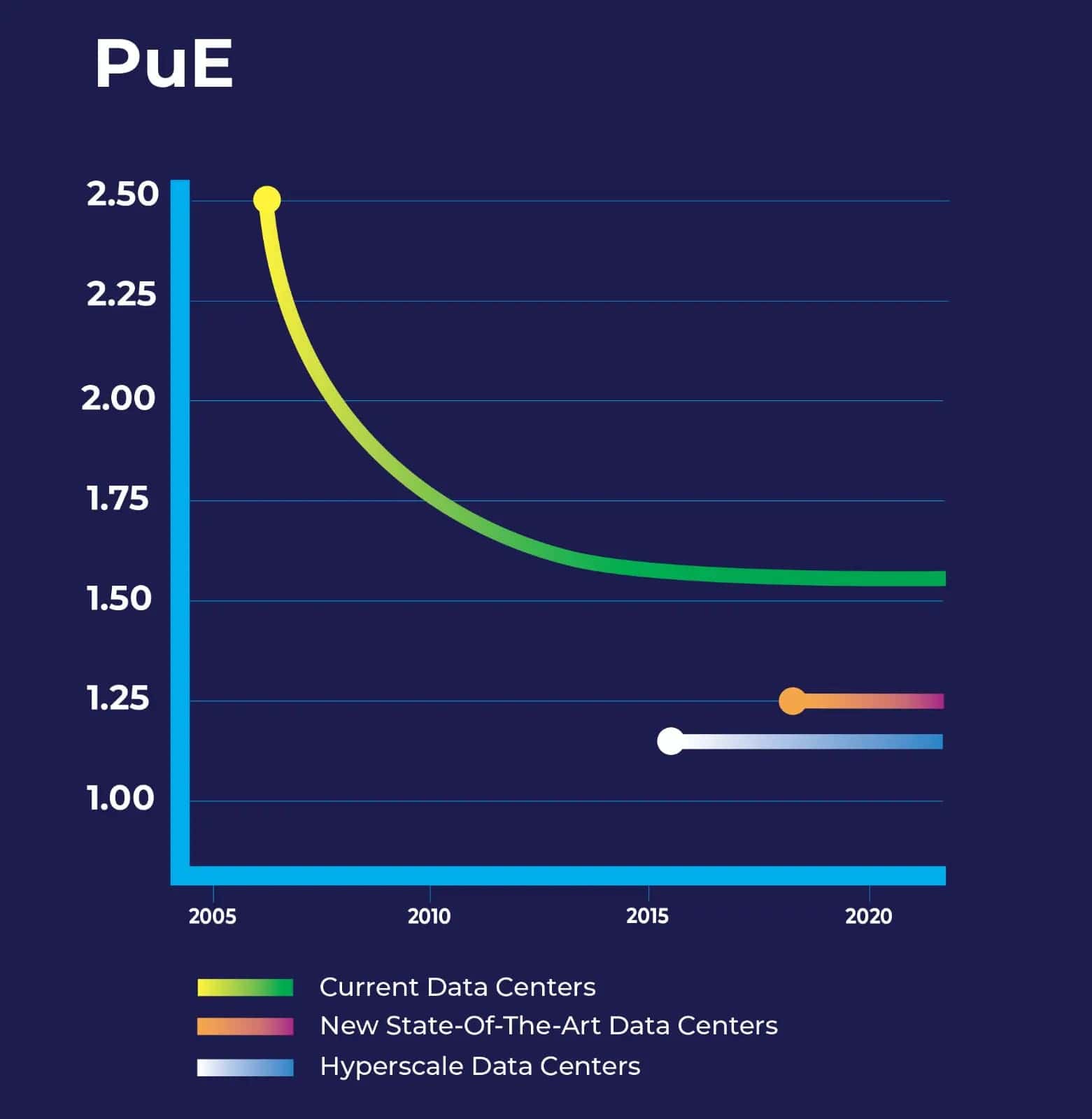

The data center industry has taken active steps to reduce this energy overhead over the last decade. One measure of energy overhead is PuE (power usage effectiveness), the ratio of the total energy a facility uses to the energy used productively by its IT equipment. Any value greater than 1.0 implies overhead. A decade ago, data center PuE was over 2.0, which meant that at least half of the energy was wasted. Since then, through a concerted effort, that number has come down from its highs to about 1.57. However, further efficiency gains seem to have flattened, and the average PuE has been largely unchanged for the last five or so years, as shown below.

It is certainly possible to do much better than the average, and some new data center designs are hitting a PuE of nearly 1.25.
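Because PuE divides total facility energy by the energy delivered to IT equipment, the share of total energy lost to overhead is 1 - 1/PuE. The Python sketch below converts in both directions. The function names are illustrative, not from any library. The last line assumes, hypothetically, that cooling is the only overhead, to show what the 40% cooling figure quoted earlier would imply on its own.

```python
def pue(total_energy: float, it_energy: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_energy <= 0:
        raise ValueError("IT energy must be positive")
    return total_energy / it_energy


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that does not reach IT equipment."""
    return 1.0 - 1.0 / pue_value


def pue_from_overhead(share: float) -> float:
    """Inverse of overhead_share: the PuE implied by an overhead fraction."""
    return 1.0 / (1.0 - share)


if __name__ == "__main__":
    for p in (2.0, 1.57, 1.25):
        print(f"PuE {p:.2f} -> {overhead_share(p):.0%} of energy is overhead")
    # Hypothetical: 40% of total energy goes to cooling and nothing else is overhead.
    print(f"40% overhead -> PuE {pue_from_overhead(0.40):.2f}")
```

A PuE of 2.0 corresponds to 50% overhead, the industry average of 1.57 to about 36%, and a 1.25 design to 20%. Conversely, a facility where 40% of all energy went to cooling, with no other overhead, would sit at a PuE of about 1.67.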

These data centers are designed with optimized:

- Rack layouts with physical hot/cold aisle containment, so that hot exhaust air is guided away from the servers.

- Thermal management systems with precise and efficient mechanical controls that can dynamically guide cold air to hot spots. These systems rely on sophisticated energy algorithms that couple server-level data with building control systems.

Note that it is difficult and expensive to retrofit a running data center with new thermal management without extensive downtime, on the order of months. Hence many of these improvements are limited to newly built data centers.

Hyperscalers like Google and Facebook have taken thermal management even further and achieved PuEs of around 1.15. They have done this by using specially redesigned servers, maximizing server utilization, and making data-center-level changes such as using free-air cooling and direct evaporative systems instead of central air conditioning. But all these PuE improvements are at risk with the next generation of chips.

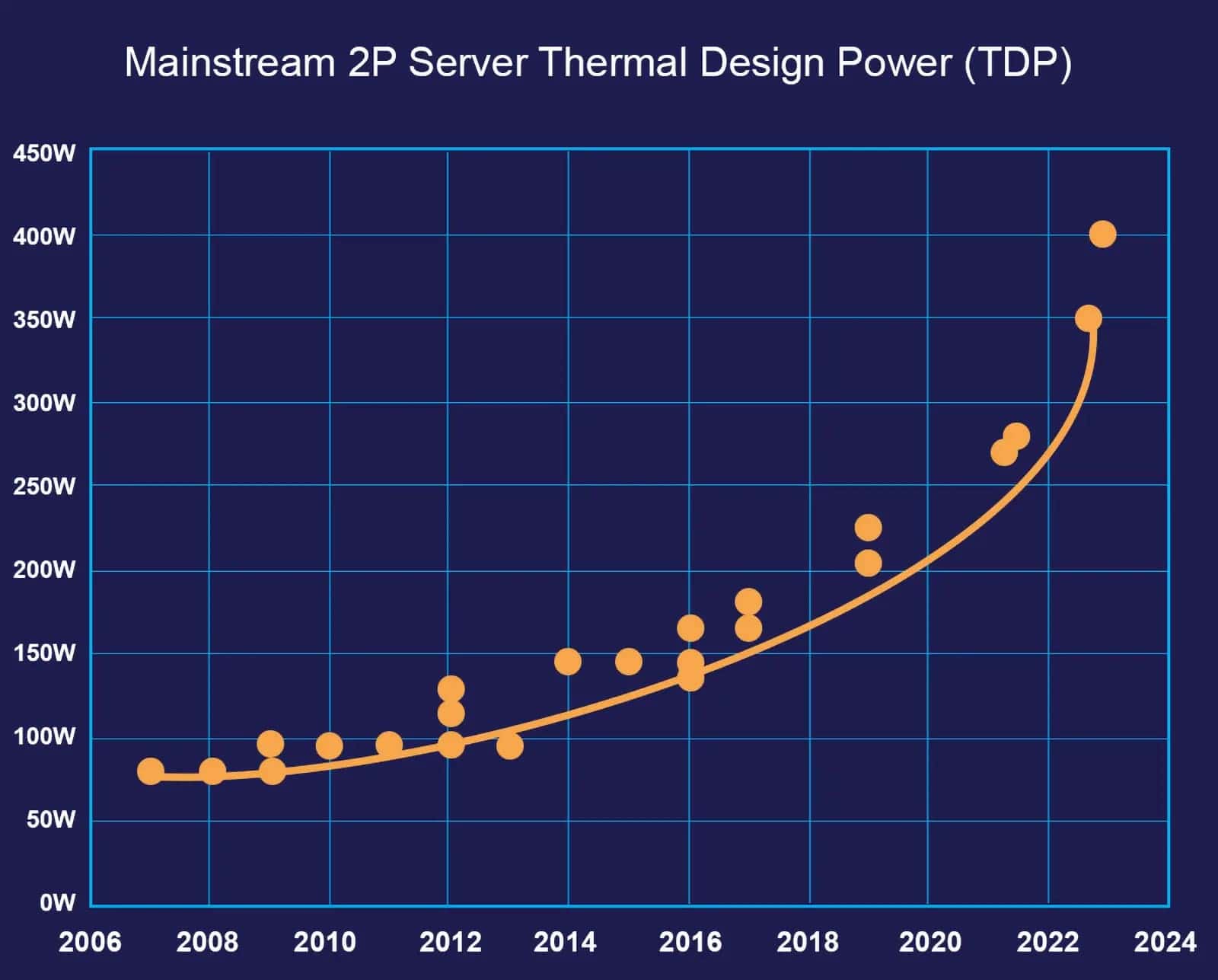

There is a locomotive coming in the form of exponentially rising heat densities from CPU chips, as shown below (figure courtesy of AMD).

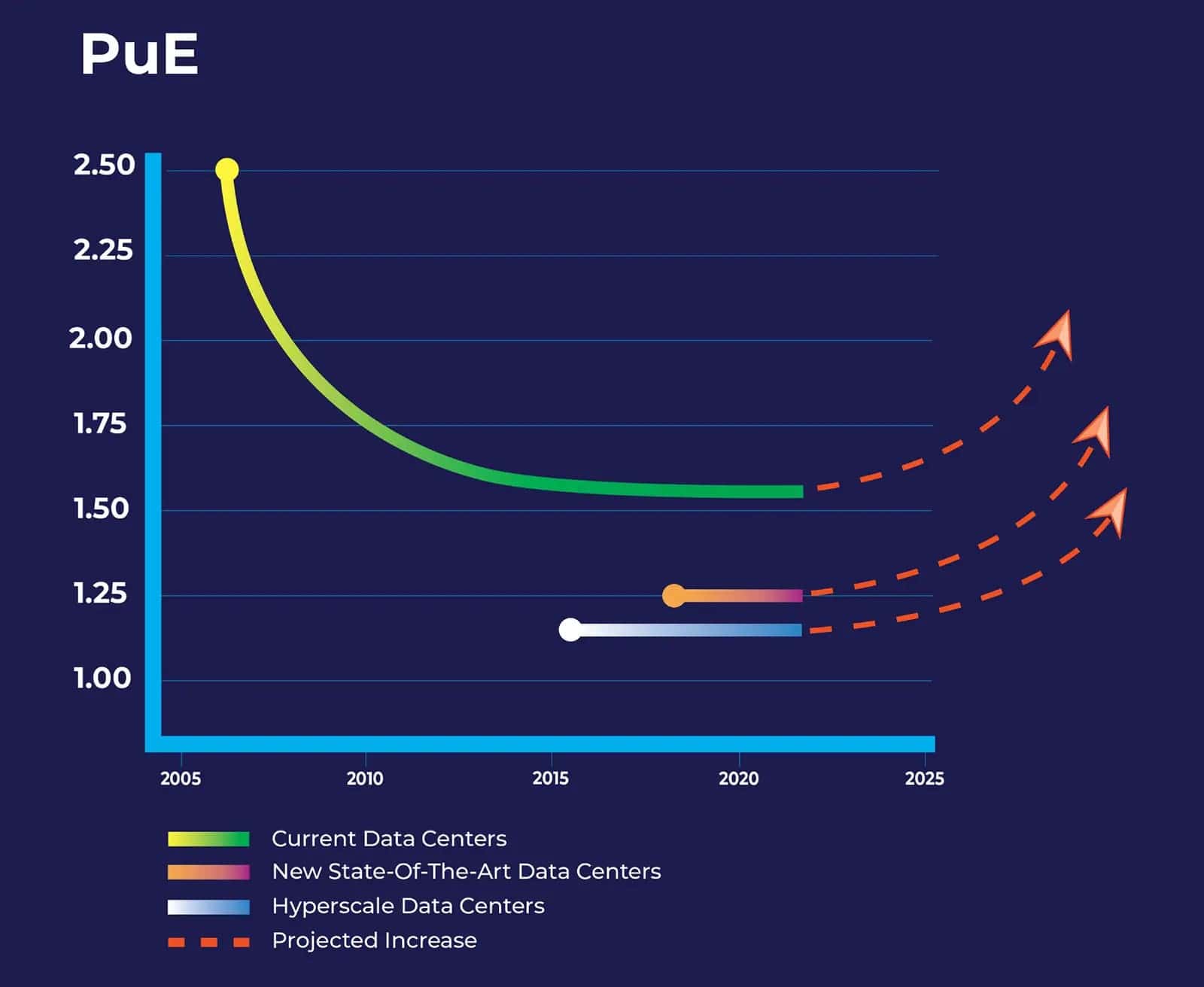

Server heat density rose relatively slowly over the last decade, which allowed companies to focus on data-center-level improvements to reduce PuE. But with server heat density expected to rise significantly going forward, average data center PuE will almost certainly start rising again, even in state-of-the-art and hyperscale data centers.

So how can you prevent the increase in PuE that rising server heat density would otherwise drive?

One option is to depopulate server racks, i.e., put fewer servers in each rack so that existing data center cooling can still keep up. The problem with this approach is that you lose compute density, so much so that you negate the benefits of the faster processors.
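The depopulation trade-off can be sketched with a fixed per-rack cooling limit. All numbers here are hypothetical and chosen only for illustration: a 15 kW air-cooling limit per rack, servers whose power doubles from 0.5 kW to 1 kW, and a 1.8x per-server speedup.

```python
import math


def servers_per_rack(rack_limit_kw: float, server_kw: float) -> int:
    """Whole servers that fit under a fixed per-rack cooling limit."""
    return math.floor(rack_limit_kw / server_kw)


def rack_throughput(rack_limit_kw: float, server_kw: float, perf_per_server: float) -> float:
    """Relative compute per rack: servers that fit times per-server performance."""
    return servers_per_rack(rack_limit_kw, server_kw) * perf_per_server


if __name__ == "__main__":
    RACK_LIMIT_KW = 15.0  # hypothetical air-cooling limit per rack
    old = rack_throughput(RACK_LIMIT_KW, server_kw=0.5, perf_per_server=1.0)
    new = rack_throughput(RACK_LIMIT_KW, server_kw=1.0, perf_per_server=1.8)
    print(f"older servers: {servers_per_rack(RACK_LIMIT_KW, 0.5)} per rack, throughput {old:.1f}")
    print(f"newer servers: {servers_per_rack(RACK_LIMIT_KW, 1.0)} per rack, throughput {new:.1f}")
```

Under these made-up numbers a rack holds 30 of the older servers but only 15 of the newer ones, so rack throughput falls from 30 to about 27 units even though each server is faster.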

Another option is to implement liquid cooling for servers. At the recent Open Compute Conference (OCP 22), Facebook announced that it is planning to implement direct-to-chip liquid cooling in its data centers. Liquid is orders of magnitude better than air at heat transfer and can cool servers effectively in a targeted fashion.
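One reason liquid wins is its much higher volumetric heat capacity. The sketch below uses the heat balance Q = rho * c_p * V_dot * dT to compare the coolant flow needed to remove the same heat with air and with water. The property values are approximate room-temperature figures, and the 1 kW load and 10 K temperature rise are hypothetical. The comparison ignores surface heat-transfer coefficients, which also favor liquid.

```python
# Approximate coolant properties near room temperature (assumed, for illustration).
AIR_DENSITY = 1.2      # kg/m^3
AIR_CP = 1005.0        # J/(kg*K)
WATER_DENSITY = 997.0  # kg/m^3
WATER_CP = 4181.0      # J/(kg*K)


def flow_for_heat(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) that carries heat_w with a coolant rise of delta_t_k.

    Rearranged from Q = rho * c_p * V_dot * dT.
    """
    return heat_w / (density * cp * delta_t_k)


if __name__ == "__main__":
    HEAT_W = 1000.0  # hypothetical 1 kW of chip heat
    DT_K = 10.0      # hypothetical 10 K coolant temperature rise
    air = flow_for_heat(HEAT_W, AIR_DENSITY, AIR_CP, DT_K)
    water = flow_for_heat(HEAT_W, WATER_DENSITY, WATER_CP, DT_K)
    print(f"air:   {air * 1000:.1f} L/s")
    print(f"water: {water * 1000:.3f} L/s")
    print(f"ratio: {air / water:.0f}x")
```

With these inputs air needs roughly 83 L/s while water needs about 0.024 L/s, a ratio of roughly 3,500.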

By implementing liquid cooling, Facebook hopes to:

- Further lower PuE immediately.

- Future-proof the data center to accommodate the rising server heat density of future generations of chips.

- Obtain power headroom, so that they can stay within the power envelope of their data centers even as individual server heat density increases.
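The power-headroom point follows from the definition of PuE: with a fixed utility feed, the IT power a facility can support is the feed divided by PuE. This sketch assumes a hypothetical 10 MW facility and compares the 1.57 industry average with the 1.15 cited for liquid cooling. The function name is illustrative.

```python
def it_capacity_kw(facility_kw: float, pue_value: float) -> float:
    """IT power that fits in a fixed facility power envelope at a given PuE."""
    return facility_kw / pue_value


if __name__ == "__main__":
    FACILITY_KW = 10_000.0  # hypothetical 10 MW utility feed
    before = it_capacity_kw(FACILITY_KW, 1.57)  # industry-average PuE from the text
    after = it_capacity_kw(FACILITY_KW, 1.15)   # liquid-cooled PuE cited in the text
    print(f"IT load at PuE 1.57: {before:,.0f} kW")
    print(f"IT load at PuE 1.15: {after:,.0f} kW")
    print(f"Headroom gained: {after / before - 1:.0%}")
```

Dropping from 1.57 to 1.15 frees about 37% more power for servers (roughly 6,369 kW versus 8,696 kW of IT load) without changing the utility connection.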

Note that any data center can see similar benefits by implementing direct liquid cooling today.

And this isn’t just for greenfield data centers. Even existing data centers can dramatically lower PuE, to as little as 1.15, by implementing direct-to-chip liquid cooling at the rack level, with only localized (rack-level) downtime.
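Seen the other way around, a retrofit that keeps the IT load fixed cuts total facility energy in proportion to the PuE improvement. This is a minimal sketch, assuming a hypothetical constant 1 MW IT load running all 8,760 hours of a year.

```python
HOURS_PER_YEAR = 8760


def annual_facility_mwh(it_load_mw: float, pue_value: float) -> float:
    """Total facility energy per year for a constant IT load at a given PuE."""
    return it_load_mw * pue_value * HOURS_PER_YEAR


if __name__ == "__main__":
    IT_LOAD_MW = 1.0  # hypothetical constant IT load
    before = annual_facility_mwh(IT_LOAD_MW, 1.57)
    after = annual_facility_mwh(IT_LOAD_MW, 1.15)
    saved = before - after
    print(f"Saved {saved:,.0f} MWh/year ({saved / before:.0%} of the facility's energy)")
```

Going from 1.57 to 1.15 saves about 3,679 MWh per year, roughly 27% of the facility's total energy, for every megawatt of IT load.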