by Praveen Asthana

AI is revolutionary, but it is also hungry.

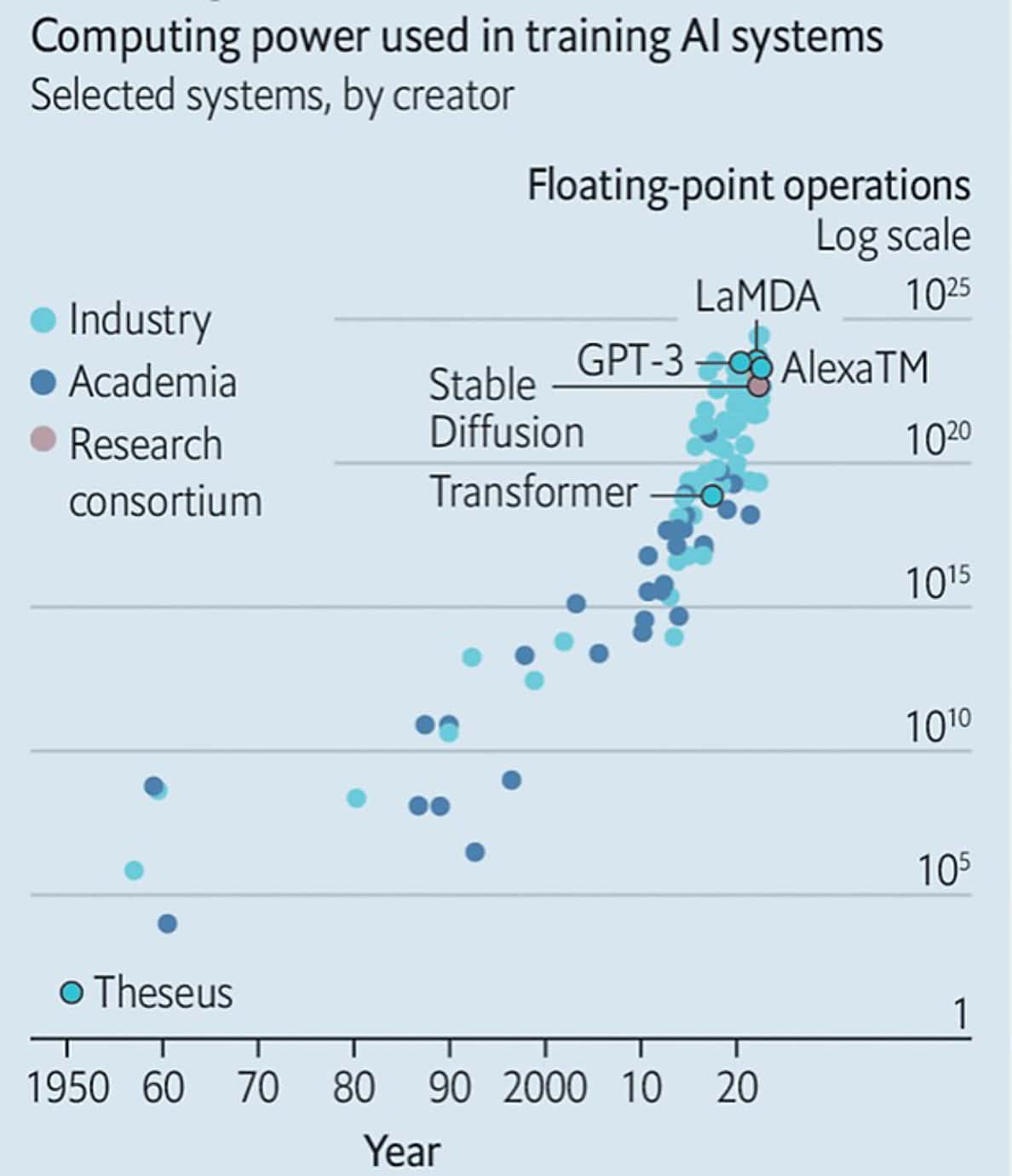

Enterprise applications using artificial intelligence are rapidly being developed in all fields. Indeed, 61% of CIOs polled by Salesforce.com expect to implement AI-based applications in their enterprises. But AI is computationally intense, and the required computing power has been growing exponentially and is expected to keep doing so as AI models become more powerful.

The computational intensity of AI is what makes it hungry for ever more of the infrastructure needed to support that compute: power, thermal management, data center space, and water. However, these growing requirements collide with a number of serious constraints that data centers are experiencing, as shown in the figure below.

Growing AI infrastructure needs are banging up against these constraints and causing headaches for IT staff and data center operators.

Fortunately, adopting liquid cooling can help data centers break through these constraints. We examine each in turn.

Lack of Data Center Power

Lack of power has become the #1 issue for expanding current data centers and for building new ones. Data centers have long been known to be power hungry, accounting for about 3% of global energy usage. As AI pushes this energy demand even higher, data center operators often find that they cannot obtain more power from the local electrical grid. This affects both the expansion of current data centers and the development of new ones. According to the leading commercial real estate services firm, CBRE, “The chief obstruction to data center growth is not the availability of land, infrastructure, or talent. It’s local power.” As an example, in Ireland the state electricity grid authority, EirGrid, called a halt to plans for up to 30 potential data centers after limits to data center construction were put in place until 2028.

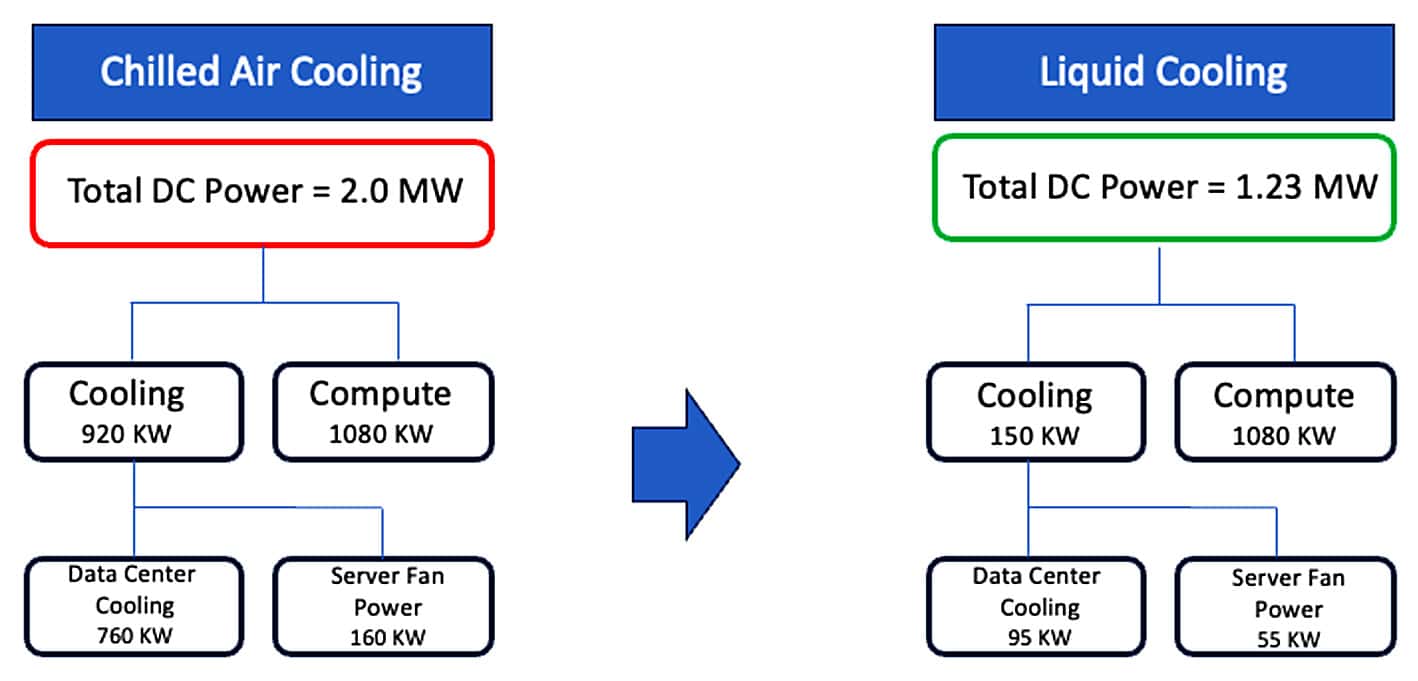

Data centers have a power usage effectiveness (PUE) of about 1.57 on average, which means that roughly 36% of the energy used by the data center goes to infrastructure overhead (lighting and cooling), of which the great majority (about 90%) is spent on cooling. The primary driver of heat in the data center is the hot CPUs in the servers. Using direct-to-chip liquid cooling instead of traditional air cooling can lower the PUE to about 1.08 and increase the amount of power available for pure compute by 70%.
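To make the PUE arithmetic concrete, here is a minimal Python sketch. It relies on the standard definition of PUE (total facility energy divided by IT equipment energy); the function names and the 1,000 kW facility budget are hypothetical, chosen only for illustration.

```python
def overhead_share(pue: float) -> float:
    """Fraction of total facility energy that goes to non-IT overhead."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    # PUE = total / IT, so the IT share is 1 / PUE and overhead is the rest.
    return 1.0 - 1.0 / pue


def it_power_kw(facility_kw: float, pue: float) -> float:
    """IT power that fits within a fixed facility power budget."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return facility_kw / pue


AIR_COOLED_PUE = 1.57     # average PUE quoted in the text
LIQUID_COOLED_PUE = 1.08  # direct-to-chip figure quoted in the text
FACILITY_KW = 1000.0      # hypothetical grid allocation, for illustration only

for label, pue in [("air", AIR_COOLED_PUE), ("liquid", LIQUID_COOLED_PUE)]:
    share = overhead_share(pue)
    it_kw = it_power_kw(FACILITY_KW, pue)
    print(f"{label}: overhead share {share:.1%}, IT power {it_kw:.0f} kW")
```

This fixed-budget view is deliberately simple and is not necessarily the calculation behind the figures quoted above; it ignores, for example, any change in the power drawn by fans inside the servers.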

Space Limitations

In air-cooled data centers, one technique operators use to manage the increased thermal density of high-powered AI servers is to spread them out both within a rack and across multiple racks, i.e., to reduce the number of servers within a rack so that the existing chilled-air cooling system can still accommodate the hotter servers. However, there are two issues with this approach:

- Data center space comes at a premium and it is limited. Once a data center is built, it has a fixed amount of space that can accommodate a limited number of server racks. Reducing the server density within a rack means that the total number of servers that a data center can hold is reduced. This is in direct conflict with the demand for more computational power in the data center.

- AI computational algorithms often cannot tolerate latency between computational units. Spreading out servers and other IT equipment increases the physical distance between them, which can impact the fidelity of the AI computations.

Direct-to-chip liquid cooling brings targeted thermal management to the hot chips within servers and thus allows for high-density server configurations within a rack. This maximizes utilization of the rack and the data center floor space and enables operators to increase the computational power of their data centers (by upgrading the servers to more powerful ones) while maintaining density.

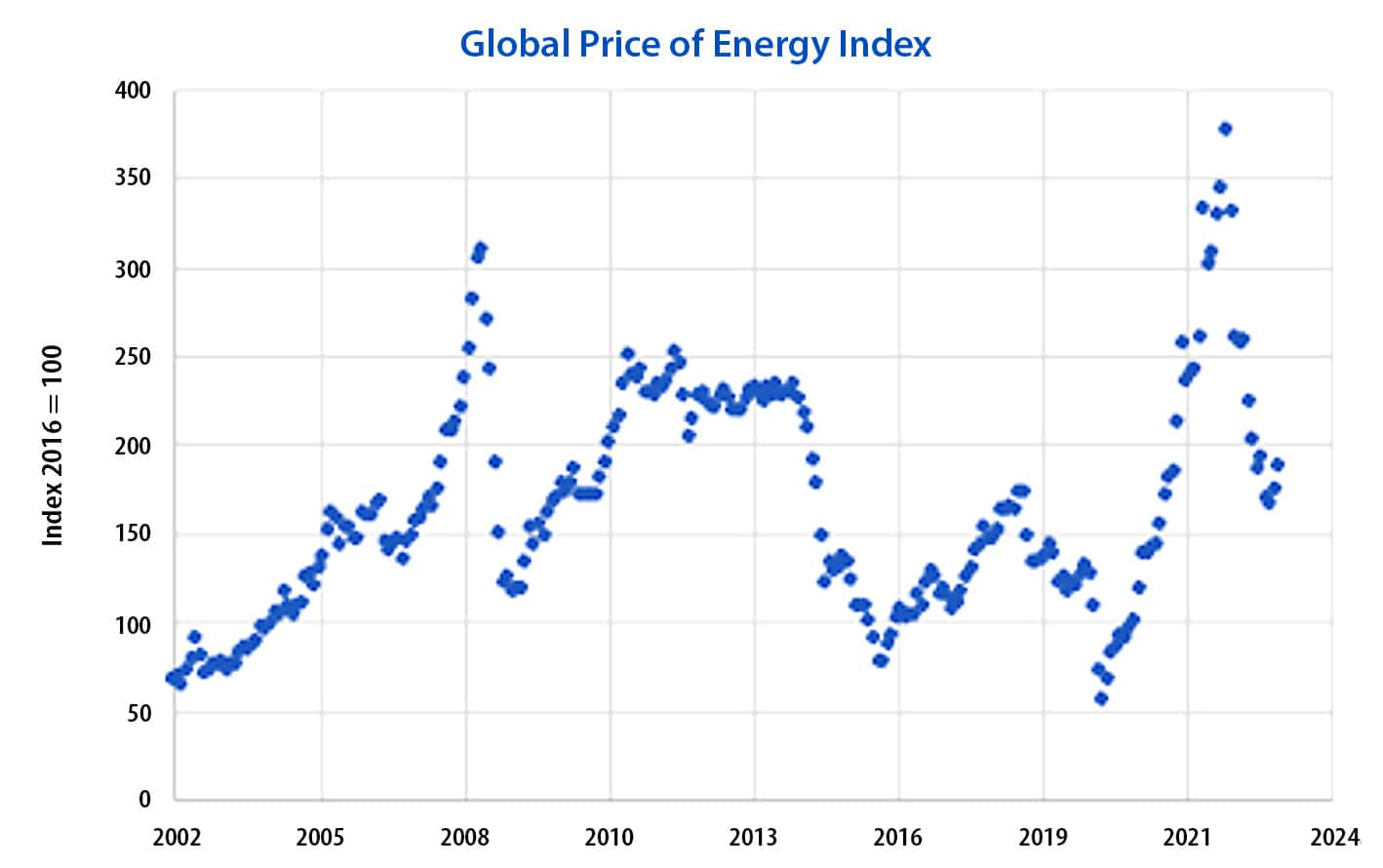

Budget Impact

Servers used for AI are extremely expensive, not just in acquisition cost but also in ongoing operational expense. A large part of the operational expense is the cost of the energy required to cool the servers, and this is becoming a headache for data center operators and CFOs as the cost of energy has been rising significantly and becoming more volatile. This can drive unplanned higher OPEX that exceeds budgets, or lead to significant volatility in OPEX, which is a nightmare for CFOs.

Using direct-to-chip liquid cooling reduces the amount of energy dedicated to cooling servers by over 40% on average and thus materially reduces the impact of higher or fluctuating electricity prices on data center OPEX.

Excessive Water Usage

Data centers use significant amounts of water in their cooling systems, resulting in the loss of millions of gallons of potable water evaporated into the atmosphere. According to the US Department of Energy, data centers use up about 2 liters of water per kWh for cooling. In 2014, the total water usage by US data centers was over 155 billion gallons. Since then, water usage has only grown, driven by the growth in the number of data centers and in the thermal footprint of each of these data centers due to higher-powered servers. Now, just three US companies (Microsoft, Google and Meta) collectively use more than twice as much water in their data centers as the entire country of Denmark. AI implementations are extremely water hungry: researchers at the University of Texas, Arlington estimated that Microsoft used about 700,000 liters of clean water to train GPT-3.

At a time when clean water is a scarce commodity globally, and climate change threatens water supplies even in Western countries, this loss of water as overhead for compute is disappointing.

However, by using direct-to-chip liquid cooling, we can significantly reduce the amount of evaporated water needed for server cooling. Indeed, the water use reduction directly tracks the improvement in PUE due to liquid cooling. In the example above comparing chilled air cooling to liquid cooling, the energy needed for data center cooling drops by 49% (from 640 kW to 460 kW). The amount of water used will drop by the same percentage.

CO2 Emissions

Many US and European companies are now required to report their carbon footprint and a majority have made public commitments to reduce their emissions. Data centers are a material contributor to global CO2 emissions, accounting for more than 3% of the total global emissions, which is more than the global aviation industry (2.5%). New AI implementations are driving these numbers even higher.

By switching to liquid cooling, companies can significantly reduce the carbon footprint of their data centers in proportion to the reduction in energy required for cooling. In this scenario, the reduction is 38%.

Conclusion

Adopting direct-to-chip liquid cooling can help company executives and data center operators break through the current limits of data center economics to truly enable AI implementations at scale.