By Josh Claman

To say Chief Information Officers have a lot on their plate is a gross understatement. Their focus spans several crucial areas, such as integrating business applications, staying ahead of the AI curve, bolstering cybersecurity defenses, and striking the right balance between cloud, colocation, and on-premises workloads; it’s a tough job that keeps getting tougher. Amid these pressing priorities, data center cooling may appear to be a less critical, even mundane, issue. However, the evolving requirements of data centers, together with environmental, financial, and infrastructural challenges and trends, make clear that data center cooling deserves a place on the CIO’s agenda.

“Today’s outstanding CIOs know that mastering technology alone isn’t a guarantee of success in tomorrow’s world.” – Forbes

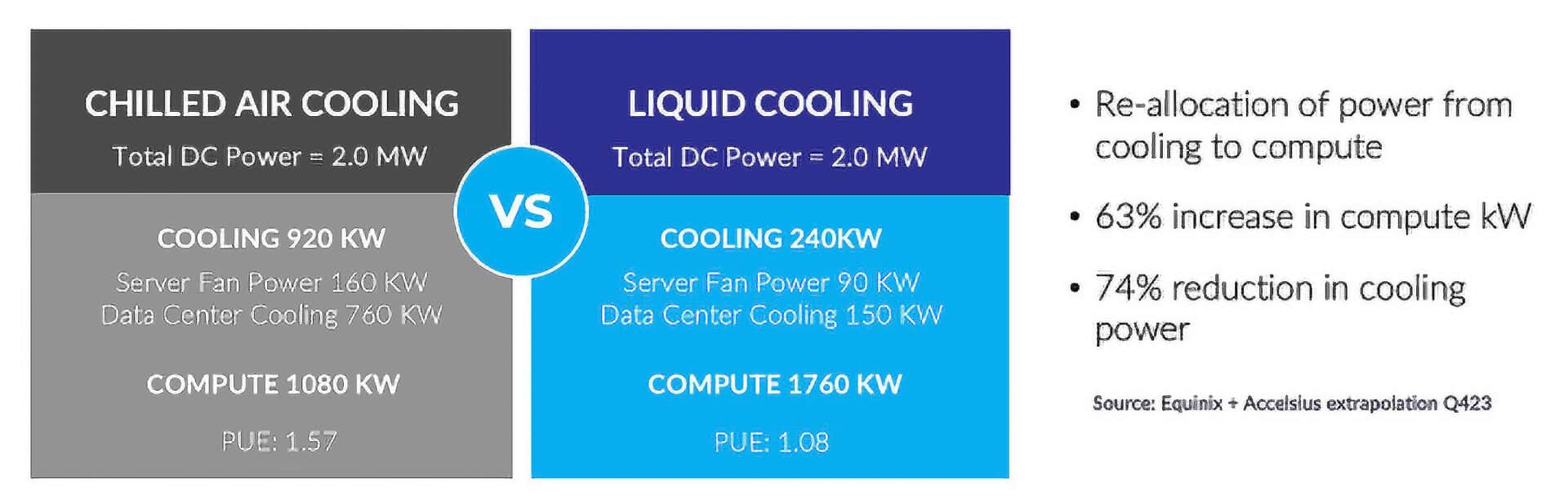

Imagine running a manufacturing facility and receiving a report at the end of the quarter stating that half of the energy used did not contribute to producing goods but was instead lost to inefficiencies. In any company, reducing this waste would become a priority. This scenario is akin to the current state of air cooling in data centers. Air cooling is remarkably inefficient, with 30-50% of power allocated to cooling processors rather than data processing. In any other business sector, no one would tolerate a substantial waste of energy, so why is this the norm in data centers? Many CIOs are unaware of these inefficiencies or have been told that more efficient options do not exist.

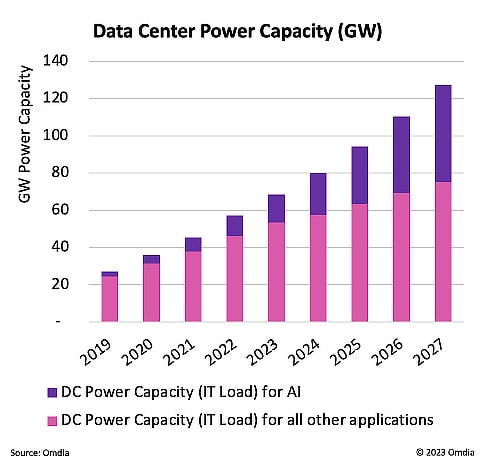

The number one obstacle to expanding existing data centers and building new ones is a lack of power. Power isn’t just an operational requirement; it is a strategic asset. Data centers consume 3% of global energy, which makes the immense scale of their demand evident. With local power grids stretched thin, grappling with aging infrastructure and the integration of renewable technologies, many requests to secure power are being rejected. In fact, data center investment is expected to grow by as much as 27% year over year, and many in the industry believe that growth would be two to three times higher if power were readily available.

An article published by Salesforce.com revealed that over 60% of CIOs expect to implement AI-based applications in their enterprises. As denser AI workloads push the boundaries, the limitations of air cooling become glaringly apparent: these workloads generate heat that air cooling systems increasingly struggle to manage. NVIDIA CEO Jensen Huang has predicted that $1 trillion will be spent over the next four years to upgrade data centers for AI. AI models may require racks as much as seven times more power-dense than those for traditional workloads, and the power consumption of the GPUs running these models will be roughly equivalent to that of the Netherlands. In fact, 30% to 40% more power must be allocated to cool these processors unless a more efficient cooling architecture is adopted. Consequently, companies that keep allocating energy inefficiently to cooling will be forced to sacrifice compute for cooling. It is no longer sufficient to secure power; allocating that power well is becoming a strategic and tactical advantage.
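To make the trade-off concrete, here is a minimal Python sketch of a fixed power budget. It assumes that the 30% to 40% figure means cooling power expressed as a fraction of IT power; the 10 MW allocation, the 40 kW rack density, and the 10% comparison overhead are hypothetical values chosen for illustration, not figures from this article. The helper names `it_power_available` and `racks_supported` are likewise hypothetical.

```python
def it_power_available(facility_kw: float, cooling_overhead: float) -> float:
    """IT (compute) power left in a fixed facility power budget.

    cooling_overhead is cooling power per unit of IT power (assumption):
    0.35 means 35% extra power is spent on cooling for every watt of compute,
    so facility_kw = it_kw * (1 + cooling_overhead).
    """
    if facility_kw < 0 or cooling_overhead < 0:
        raise ValueError("inputs must be non-negative")
    return facility_kw / (1.0 + cooling_overhead)


def racks_supported(facility_kw: float, cooling_overhead: float, rack_kw: float) -> int:
    """Whole racks of a given IT density that fit in the remaining budget."""
    return int(it_power_available(facility_kw, cooling_overhead) // rack_kw)


if __name__ == "__main__":
    FACILITY_KW = 10_000  # hypothetical 10 MW grid allocation
    RACK_KW = 40          # hypothetical AI rack density, in kW of IT load
    for overhead in (0.40, 0.30, 0.10):  # 10% is a hypothetical comparison point
        it_kw = it_power_available(FACILITY_KW, overhead)
        racks = racks_supported(FACILITY_KW, overhead, RACK_KW)
        print(f"overhead {overhead:.0%}: {it_kw:,.0f} kW of IT load, {racks} racks")
```

Under these assumptions, cutting cooling overhead from 40% to 10% raises the number of 40 kW racks the same 10 MW can support from 178 to 227.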

Chart: Adoption of AI and average rack power densities.

An “At a Glance” Guide to Emerging Liquid Cooling Options

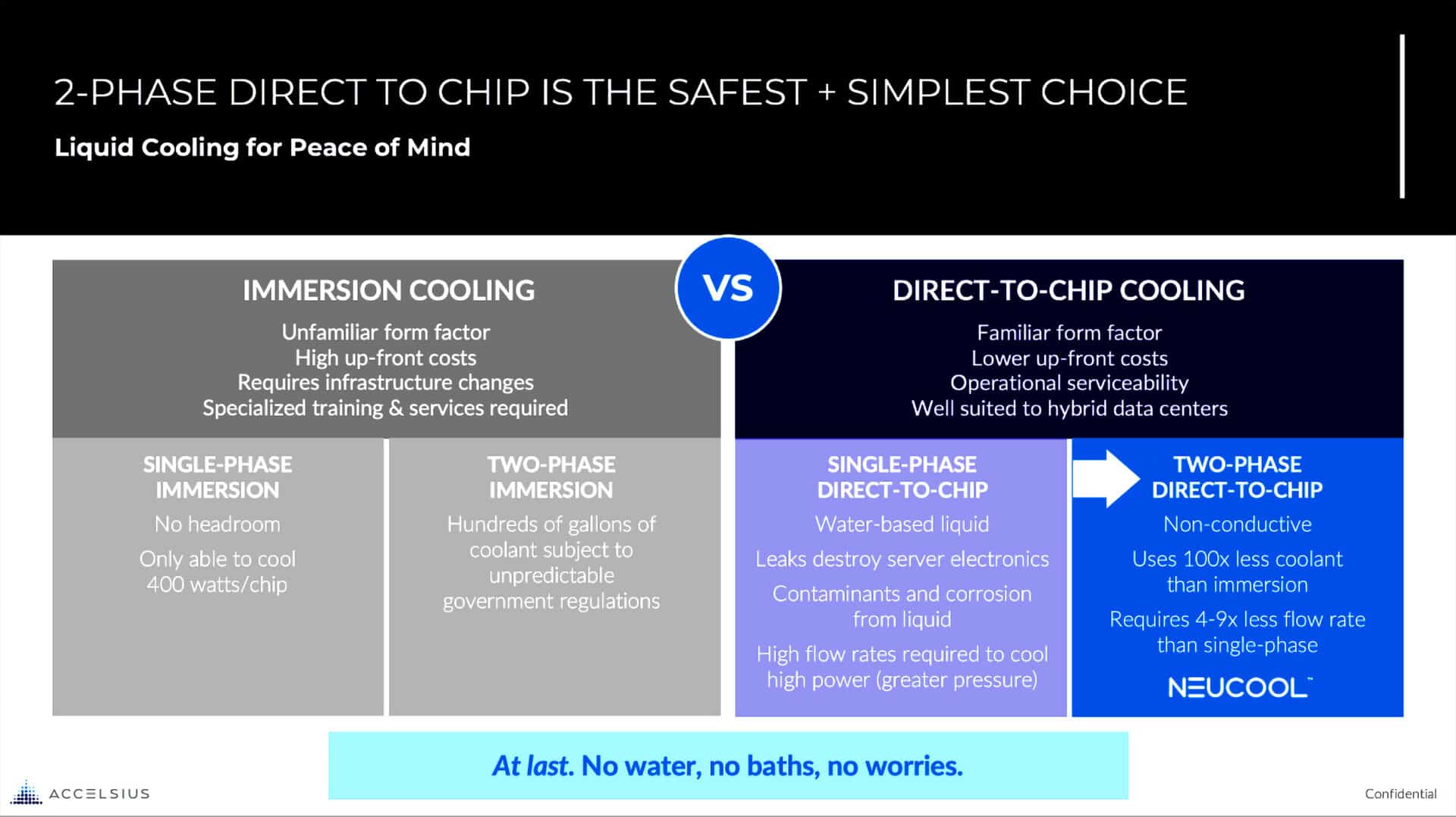

The universe of liquid cooling is complex, but the infographic above sorts the major types of liquid cooling (immersion and direct-to-chip) and explains the differences between single-phase and two-phase approaches within each group.

Immersion

The immersion method involves submerging IT equipment completely in a non-conductive liquid, which absorbs heat more effectively than air. The biggest benefit of immersion cooling is its ability to remove 100% of the heat generated by IT components. However, IT teams considering immersion cooling have critical factors to weigh. A primary concern is its incompatibility with existing infrastructure: immersion cooling typically calls for a complete overhaul of current setups, requires negotiating warranty arrangements with OEM vendors and integrators, and is not compatible with most legacy systems. It also demands specialized training and services, further boosting costs.

Single-Phase, Direct-to-Chip

The liquid cooling market is nascent, with its share of the total data center cooling market still in the low single digits. Because no technology is yet entrenched, there remains a great deal of freedom to choose the optimal one. The most common liquid cooling method in data centers simply pumps water through “cold plates” mounted on the processors, an approach commonly called single-phase, direct-to-chip cooling. Despite its first-mover advantage, water cooling presents a range of challenges that warrant careful consideration. We all learned early on not to place a cup of water next to our computers for one important reason: water and electronics do not mix, and this principle applies just as well to data center cooling.

Water poses a significant risk of damage to increasingly expensive IT equipment. This risk is not just theoretical; incidents of water destroying motherboards in data centers are well documented. Importantly, there is also a practical limit to how much heat single-phase cooling can remove: for a given temperature rise, the heat carried away grows linearly with the water’s flow rate. This ‘heat removal ceiling’ means that beyond a certain point, simply increasing water flow is untenable: pressures become too high, orifices too small, and frictional corrosion of the cooling loop becomes a chronic issue.

Another significant factor in cooling capacity is the temperature of the facility water loop. For high-performance AI/ML workloads, achieving enhanced cooling often requires higher water flow rates and pressures, as well as chilling the water well below the typical W32 or W45 levels (facility supply water up to 32 °C and 45 °C, respectively) defined by ASHRAE, the American Society of Heating, Refrigerating and Air-Conditioning Engineers. ASHRAE established these classes to set standard facility water temperatures that balance equipment performance and energy consumption. Chilling water below these levels requires substantial additional energy, which contradicts sustainability and energy efficiency goals.

The maintenance demands of water-cooling systems are also non-trivial. Regular flushes are necessary to mitigate particulate and biological contamination, problems that are all exacerbated at higher loop pressures. Being the first mover does not guarantee being the optimal technology; often, it is not.
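The linear relationship described above is the standard single-phase heat balance, Q = ṁ · c_p · ΔT, where ṁ is the coolant mass flow, c_p its specific heat, and ΔT its temperature rise across the cold plate. The Python sketch below (helper name hypothetical, water properties approximate) estimates the water flow a cold plate needs. It deliberately ignores pressure drop and pump limits; it only shows that doubling the heat load, or halving the allowable temperature rise, doubles the required flow.

```python
WATER_CP_J_PER_KG_K = 4186.0     # specific heat of liquid water, approximate
WATER_DENSITY_KG_PER_M3 = 997.0  # density of water near 25 °C, approximate


def required_flow_lpm(heat_w: float, delta_t_k: float) -> float:
    """Water flow (litres per minute) needed to carry heat_w with a delta_t_k rise.

    Single-phase sensible heat balance: Q = m_dot * c_p * dT, so for a fixed
    temperature rise the required flow grows linearly with the heat load.
    Pressure drop and pump limits are not modelled.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / WATER_DENSITY_KG_PER_M3
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/s -> L/min


if __name__ == "__main__":
    for watts in (500, 1000, 2000):
        for dt in (10.0, 5.0):
            print(f"{watts:>5} W, dT={dt:>4} K -> {required_flow_lpm(watts, dt):.2f} L/min")
```

With these approximations, a 1,000 W chip at a 10 K rise needs roughly 1.4 L/min; at 2,000 W, or at a 5 K rise, that doubles to roughly 2.9 L/min, which is how flow and pressure requirements climb toward the ceiling.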

Two-Phase, Direct-to-Chip

As IT teams explore the landscape of liquid cooling options, two-phase, direct-to-chip (DTC) cooling emerges as a compelling choice. This method uses a dielectric (non-conductive) fluid, eliminating the risk of a leak causing electrical damage to IT equipment. A key advantage of two-phase DTC is its high ‘heat removal ceiling’: because the fluid absorbs heat as it boils, the cooling system can keep pace even as chip wattages increase, protecting the longevity and reliability of IT investments. With chips quickly approaching the 1,000 W mark, this is an essential consideration.
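A rough way to see why boiling raises the ceiling: in two-phase cooling, most of the heat goes into vaporizing the fluid, Q = ṁ · x · h_fg, where x is the vapor fraction leaving the cold plate and h_fg is the fluid’s latent heat, rather than into warming it. The Python sketch below compares the mass flow each approach needs for a 1,000 W chip. The 150 kJ/kg latent heat and the 50% exit quality are illustrative assumptions, not properties of any particular product or fluid, and the function names are hypothetical; real designs also depend on fluid density, pressure drop, and cold plate geometry.

```python
WATER_CP_J_PER_KG_K = 4186.0  # specific heat of liquid water, approximate

# Illustrative assumption only: latent heat of vaporization for a generic
# dielectric refrigerant. Real fluids and operating points differ.
ASSUMED_LATENT_HEAT_J_PER_KG = 150_000.0


def single_phase_mass_flow(heat_w: float, delta_t_k: float) -> float:
    """kg/s of water needed to absorb heat_w as sensible heat (Q = m_dot * c_p * dT)."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)


def two_phase_mass_flow(heat_w: float, exit_quality: float,
                        latent_heat_j_per_kg: float = ASSUMED_LATENT_HEAT_J_PER_KG) -> float:
    """kg/s of fluid needed to absorb heat_w by partial boiling (Q = m_dot * x * h_fg).

    exit_quality is the vapor mass fraction leaving the cold plate (0 < x <= 1).
    Sensible heating of the liquid is ignored for simplicity.
    """
    if not 0.0 < exit_quality <= 1.0:
        raise ValueError("exit_quality must be in (0, 1]")
    return heat_w / (exit_quality * latent_heat_j_per_kg)


if __name__ == "__main__":
    chip_w = 1000.0  # the ~1,000 W chip class discussed in the text
    water = single_phase_mass_flow(chip_w, delta_t_k=10.0)
    boiling = two_phase_mass_flow(chip_w, exit_quality=0.5)
    print(f"water, 10 K rise:       {water * 1000:.1f} g/s")
    print(f"two-phase, 50% quality: {boiling * 1000:.1f} g/s")
    print(f"mass-flow ratio:        {water / boiling:.2f}x")
```

Under these assumptions, the boiling loop needs roughly 13 g/s versus roughly 24 g/s of water, about 1.8 times less mass flow; different fluids or operating points will shift that ratio.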

Furthermore, two-phase DTC systems have lower maintenance requirements than other cooling methods because they rely on dielectric fluid, alleviating the risk of bio-contaminants clogging the primary cooling loops. This reduction in upkeep simplifies operational demands and contributes to a lower total cost of ownership.

Importantly, this technology is also designed to be compatible with existing legacy infrastructure, offering a seamless integration path for data centers looking to upgrade their cooling systems without a complete overhaul. Two-phase DTC, exemplified by systems like the Accelsius NeuCool System, supports maximum rack densification, enabling more servers per rack. This is a significant advantage for compute-intensive systems, in contrast to less efficient air cooling, which often necessitates gapped stacking within server racks. The space efficiency of two-phase DTC frees floor space for planned expansions or alternative applications. For new data centers, this translates to smaller space allocations and, consequently, lower lease or occupancy costs. Combined with its compatibility and cost competitiveness, this space efficiency positions two-phase DTC as a pragmatic and forward-looking solution for CIOs aiming to balance performance, cost efficiency, and ease of transition in their data center cooling strategies.
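The densification argument comes down to which limit binds first in a rack: physical space or the heat the cooling approach can remove. The Python sketch below models servers per rack as the smaller of those two limits. The 42U rack, the 2U/2 kW servers, and the 20 kW air-cooled versus 80 kW liquid-cooled per-rack heat limits are hypothetical round numbers, not measurements of NeuCool or any other system, and `RackLimits` and its helpers are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RackLimits:
    """Hypothetical per-rack constraints; substitute real site figures."""
    rack_units: int       # usable rack units (U)
    heat_limit_kw: float  # heat the cooling approach can remove per rack


def servers_per_rack(limits: RackLimits, server_units: int, server_kw: float) -> int:
    """Servers that fit in one rack: the tighter of the space and cooling limits."""
    by_space = limits.rack_units // server_units
    by_heat = math.floor(limits.heat_limit_kw / server_kw)
    return min(by_space, by_heat)


def racks_needed(total_servers: int, limits: RackLimits,
                 server_units: int, server_kw: float) -> int:
    """Racks required to house total_servers under the given limits."""
    per_rack = servers_per_rack(limits, server_units, server_kw)
    if per_rack == 0:
        raise ValueError("a single server exceeds the rack's limits")
    return math.ceil(total_servers / per_rack)


if __name__ == "__main__":
    air = RackLimits(rack_units=42, heat_limit_kw=20.0)     # assumed air-cooled ceiling
    liquid = RackLimits(rack_units=42, heat_limit_kw=80.0)  # assumed liquid-cooled ceiling
    for name, limits in (("air", air), ("liquid", liquid)):
        print(name, racks_needed(200, limits, server_units=2, server_kw=2.0))
```

With these assumed figures, 200 servers need 20 air-cooled racks, where heat caps each rack at 10 servers, but only 10 liquid-cooled racks, where space becomes the binding limit at 21 servers per rack.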

The Sustainability Angle

In the context of corporate responsibility, most companies now operate with Environmental, Social, and Governance (ESG) scorecards, making sustainability a key performance indicator. As the climate crisis progresses, the growing pressure of environmental responsibility will continue to influence the business decisions CIOs make. A report published by the Harvard Business Review also highlights the financial benefits of sustainability: companies that emphasized sustainability had 20% higher valuations and lower capital costs, and consequently attracted more investors. Morgan Stanley, meanwhile, found that 85% of US investors now express interest in sustainable investment strategies. When 3% of the world’s energy powers data centers, and 40% of that energy goes not to processing data but to cooling these facilities, it becomes clear how unsustainable current data center cooling methods are.

As Kevin Brown of Schneider Electric writes, regulations are coming, and companies that prioritize resiliency, security and sustainability will “discover a competitive advantage by running their business more efficiently with less waste” (Forbes). By shifting to more energy-efficient cooling methods, such as two-phase DTC, IT departments can move beyond energy-intensive operations, build a foundation for the coming demands of AI, and actively contribute to their company’s sustainability goals.

Conclusion

One look at the predicted trends in AI should leave every CIO asking whether they have the infrastructure to support it. A large part of that is ensuring every megawatt is allocated efficiently. The insights presented here show that when it comes to selecting a cooling method that is not only commercially viable but also aligned with sustainability goals, two-phase, direct-to-chip cooling is the standout choice. And we are not the only ones saying it. Industry analysts have identified two-phase, direct-to-chip cooling as the leading solution, and Lucas Beran of Dell’Oro Group notes, “There is a change unfolding…This has led to an upward revision of our liquid cooling forecast.” With its ability to increase server density and performance while reducing energy and water usage, and its blend of operational efficiency, ease of installation and maintenance, and environmental responsibility, two-phase, direct-to-chip cooling is the optimal choice for forward-thinking data centers.