by Praveen Asthana

Most data center operators can’t imagine life without their chilled air systems. But this belief, however well rationalized, is actually a trap, consigning them to a life of increasing operational pain.

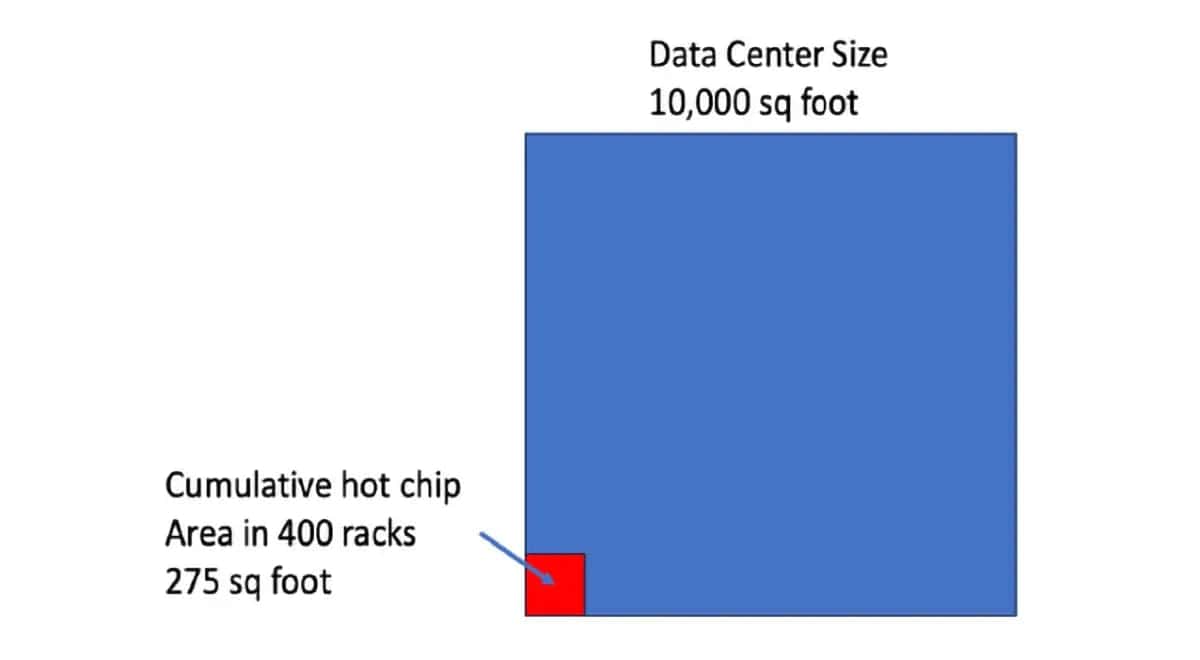

To begin with, chilled air systems are extraordinarily blunt cooling instruments. To see just how blunt, consider that the primary heat generator in the data center is the CPU within each server. A 10,000-square-foot data center containing 400 racks could hold 16,000 CPUs (40 per rack) that need cooling. Each CPU covers 1,600 sq mm, so all the CPUs in the data center add up to roughly 275 square feet. To cool those 275 square feet of heat-producing surface, you run an AC system that cools all 10,000 square feet. That is the very definition of blunt cooling.
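The area arithmetic above is easy to check. The short script below assumes 40 CPUs per rack (implied by 16,000 CPUs across 400 racks) and treats each CPU's 1,600 sq mm footprint as its heat-producing surface; the function name is purely illustrative.

```python
# Back-of-the-envelope check of the CPU-area vs. floor-area comparison.
# Assumptions: 40 CPUs per rack and a 1,600 sq mm heat-producing footprint per CPU.

MM2_PER_SQFT = 304.8 ** 2  # 1 ft = 304.8 mm exactly, so 1 sq ft = 92,903.04 sq mm


def total_cpu_area_sqft(racks: int, cpus_per_rack: int, cpu_area_mm2: float) -> float:
    """Return the combined CPU surface area in square feet (hypothetical helper)."""
    total_mm2 = racks * cpus_per_rack * cpu_area_mm2
    return total_mm2 / MM2_PER_SQFT


floor_area_sqft = 10_000
cpu_area_sqft = total_cpu_area_sqft(racks=400, cpus_per_rack=40, cpu_area_mm2=1_600)

print(f"CPU area: {cpu_area_sqft:.1f} sq ft")
print(f"Floor area per sq ft of CPU: {floor_area_sqft / cpu_area_sqft:.0f}x")
print(f"CPU share of floor area: {cpu_area_sqft / floor_area_sqft:.2%}")
```

The exact figure comes out at about 275.6 sq ft, so the CPUs account for under 3% of the floor area the air system has to condition.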

Because chilled air systems are so blunt, a good deal of design and operational effort must be spent to achieve reasonable efficiency. And this thermal design and operational tweaking has to be ongoing: server and rack distributions within the data center change over time, chips get hotter with each successive server model, and operators are under pressure to stay within their power budgets, data center space, ESG targets, and financial budgets.

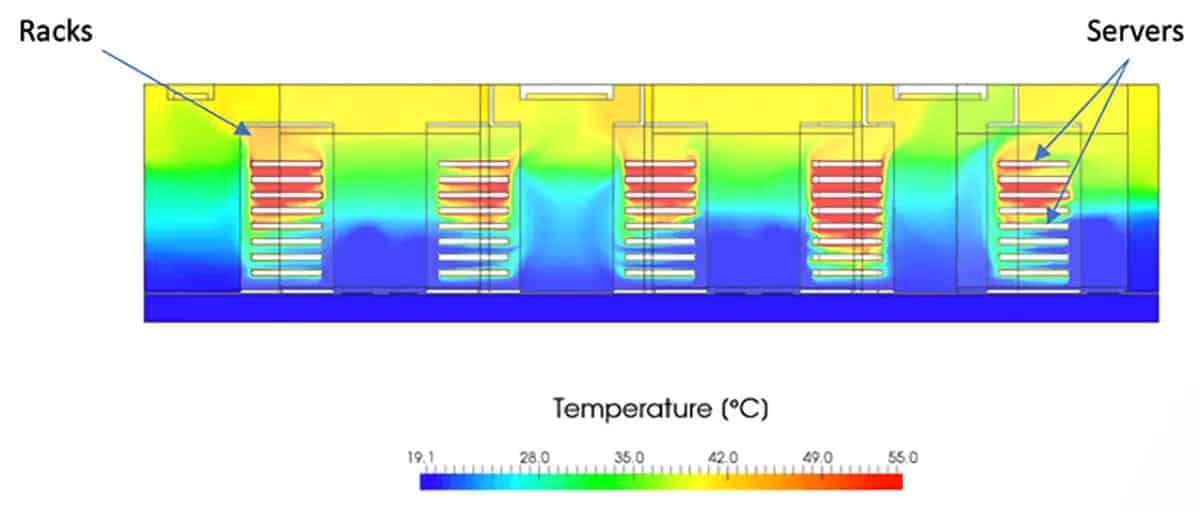

The primary source of design and operational complexity is that heat is unevenly distributed at every level: across the floor, within a rack, and even within a server. Within a data center, some racks will hold hotter servers, and that distribution changes over time. Even within a rack, the thermal distribution is uneven, as shown below. Chilled air coming up from the raised floor is warmed by the lower servers and may be too warm to effectively cool the upper servers in the rack. Since most data centers don't do thermal imaging of their racks, the only symptom may be that the upper servers run throttled or have shorter life spans than the lower ones.

Operators who recognize this issue initially compensate by increasing the flow (CFM) of the chilled air, which is expensive, or by making the air colder, which is also expensive. An unintended consequence of ever-colder air is that the bottom servers may get so cold that they risk condensation, which can cause electrical shorts. Can't win!
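Whether colder air creates a condensation risk comes down to comparing a surface's temperature with the dew point of the surrounding air. The sketch below estimates dew point with the Magnus approximation. Treating a bottom server's surface as sitting at supply-air temperature, the 2 °C safety margin, and the sample room conditions are all simplifying assumptions for illustration, not figures from this article.

```python
import math

# Commonly used Magnus-formula coefficients for water vapor over liquid water.
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # deg C


def dew_point_c(air_temp_c: float, relative_humidity_pct: float) -> float:
    """Approximate dew point (deg C) of air via the Magnus formula."""
    if not 0 < relative_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(relative_humidity_pct / 100) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def condensation_risk(surface_temp_c: float, room_temp_c: float,
                      room_rh_pct: float, margin_c: float = 2.0) -> bool:
    """True if a surface is at or within margin_c of the room air's dew point.

    Hypothetical check: assumes the server surface cools to roughly the
    supply-air temperature and is exposed to the room air's humidity.
    """
    return surface_temp_c <= dew_point_c(room_temp_c, room_rh_pct) + margin_c


room_temp_c, room_rh_pct = 24.0, 50.0  # illustrative room conditions
print(f"Dew point: {dew_point_c(room_temp_c, room_rh_pct):.1f} C")
for supply_c in (10.0, 18.0):
    print(f"Supply air {supply_c} C -> condensation risk: "
          f"{condensation_risk(supply_c, room_temp_c, room_rh_pct)}")
```

With room air at 24 °C and 50% relative humidity, the dew point is about 12.9 °C, so pushing supply air down to 10 °C crosses into the risky zone while 18 °C stays clear.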

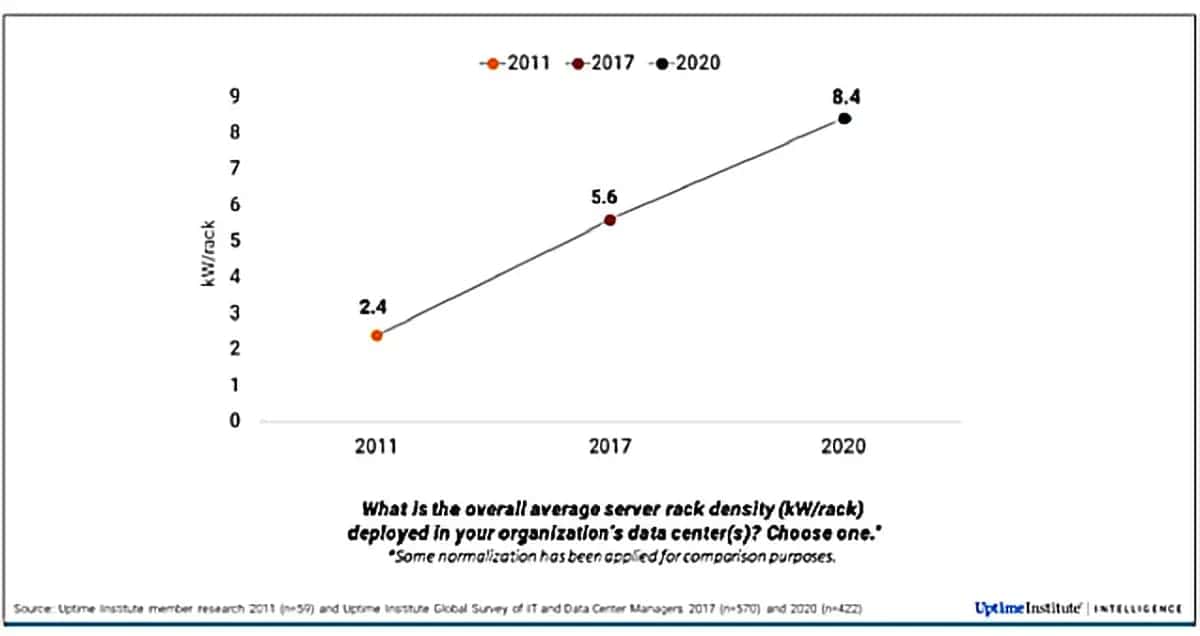

Rack thermal densities are a moving target. Over the last decade, average rack thermal densities have quadrupled as server CPUs have run hotter, with CPU thermal power roughly doubling from 100 watts to 200 watts over the same period.
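To put "quadrupled" and "doubled" on a common footing, the implied compound annual growth rates can be computed directly. This sketch takes "the last decade" as exactly 10 years, which is an assumption.

```python
def implied_cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


rack_density_growth = implied_cagr(1, 4, 10)   # rack density quadrupled over ~10 years
cpu_power_growth = implied_cagr(100, 200, 10)  # CPU thermal power 100 W -> 200 W

print(f"Rack thermal density: {rack_density_growth:.1%} per year")
print(f"CPU thermal power:    {cpu_power_growth:.1%} per year")
```

Rack density works out to roughly 14.9% per year versus about 7.2% per year for per-CPU thermal power, so the doubling in CPU power alone does not account for the full quadrupling.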

In response to these rising rack power densities, the data center HVAC industry has developed a growing number of accommodative products, designed primarily to deliver more air, colder air, or better-targeted chilled air.

These include:

– Bigger, beefier AC systems

– Hot aisle containment

– Cold aisle containment

– Row-based air handlers and cooling systems

– Chilled water loops and larger cooling towers

– Increasingly colder water

– Rear door heat exchangers

Most of these require sophisticated data-center-level thermal CFD modeling to get right, and even then, each comes with its own cost and complexity. Take hot aisle and cold aisle containment, for example: implementing it can interfere with a data center's fire suppression systems, forcing those to be rethought as well.

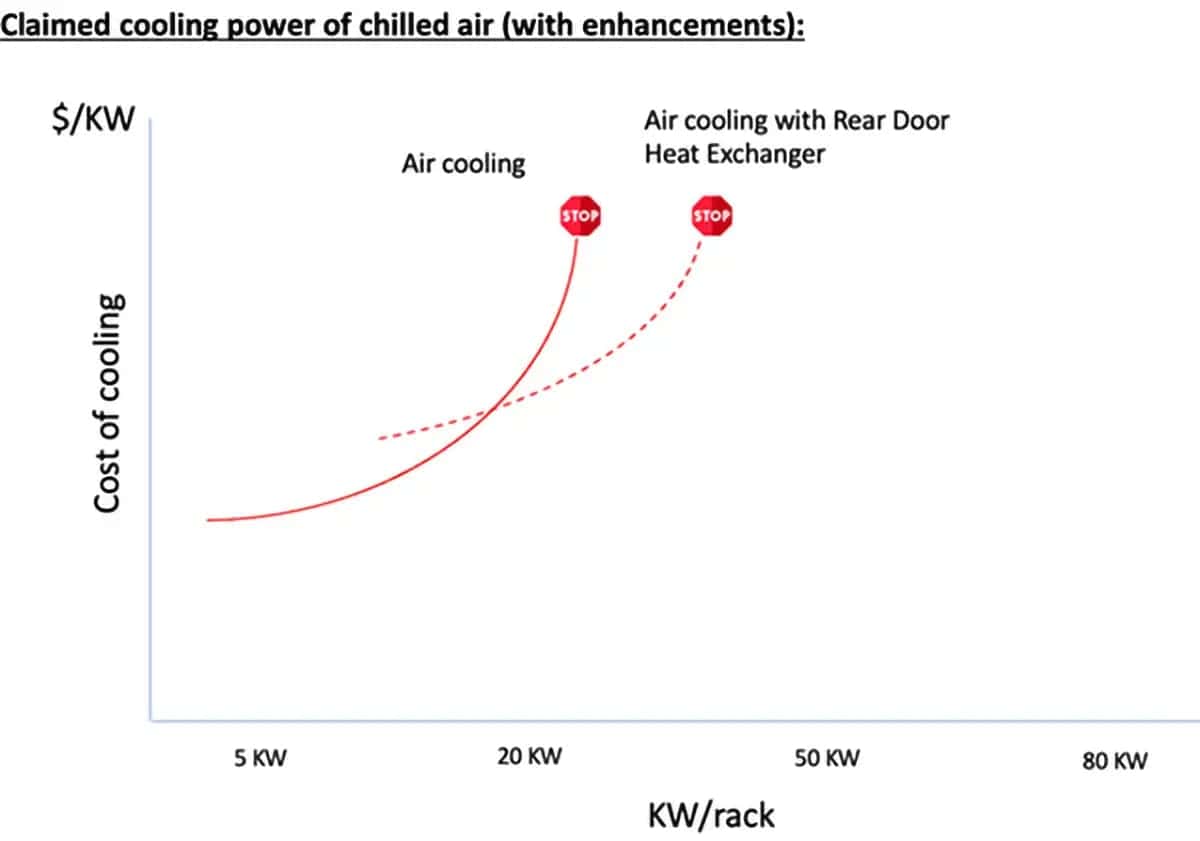

It is tempting to stick with AC systems because they are the devil you know. But doing so has a downside. While on paper chilled air systems can seemingly handle higher rack thermal densities, in reality this comes with a significant increase in cost and operational complexity, as shown in the figure below. The alternative is excessive CPU throttling, which degrades server performance and overall compute effectiveness in high-workload applications. And as rising CPU thermal power pushes rack densities toward the 50 kW/rack range over the next few years, the cost and complexity of cooling with air will simply become untenable.
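The airflow side of that scaling can be made concrete with the standard sensible-heat rule of thumb for air, Q (BTU/hr) ≈ 1.08 × CFM × ΔT (°F), which assumes standard sea-level air. In this sketch, the 20 °F air temperature rise across the rack and the sample rack densities are illustrative assumptions, and the helper name is hypothetical.

```python
WATTS_TO_BTU_PER_HR = 3.412  # 1 W is about 3.412 BTU/hr
SENSIBLE_HEAT_FACTOR = 1.08  # BTU/hr per (CFM x deg F) for standard sea-level air


def required_cfm(rack_watts: float, delta_t_f: float) -> float:
    """Airflow (CFM) needed to remove rack_watts of heat with a delta_t_f air temperature rise."""
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be positive")
    return rack_watts * WATTS_TO_BTU_PER_HR / (SENSIBLE_HEAT_FACTOR * delta_t_f)


DELTA_T_F = 20  # assumed inlet-to-exhaust temperature rise
for rack_kw in (5, 10, 20, 50):
    cfm = required_cfm(rack_kw * 1_000, DELTA_T_F)
    print(f"{rack_kw:>2} kW/rack -> {cfm:,.0f} CFM at a {DELTA_T_F} F rise")
```

Required airflow grows linearly with rack power, so a 50 kW rack needs ten times the airflow of a 5 kW rack (roughly 7,900 CFM under these assumptions), unless the air is made colder to widen the temperature rise.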

At some point, kicking the can down the road with chilled air cooling leads to diminishing returns, exponentially greater cost, and a dead end. For this reason, many data center professionals believe the time has come to seriously consider alternatives to chilled air cooling. We believe the best alternative is direct-to-chip liquid cooling, because it is the most targeted (and thus the most efficient) way to cool the hot chips in the data center. With direct-to-chip liquid cooling, instead of spending cooling energy on a 10,000-square-foot area to cool 275 square feet of chips, you cool just the 275 square feet of chips.